COCO: COmparing Continuous Optimizers

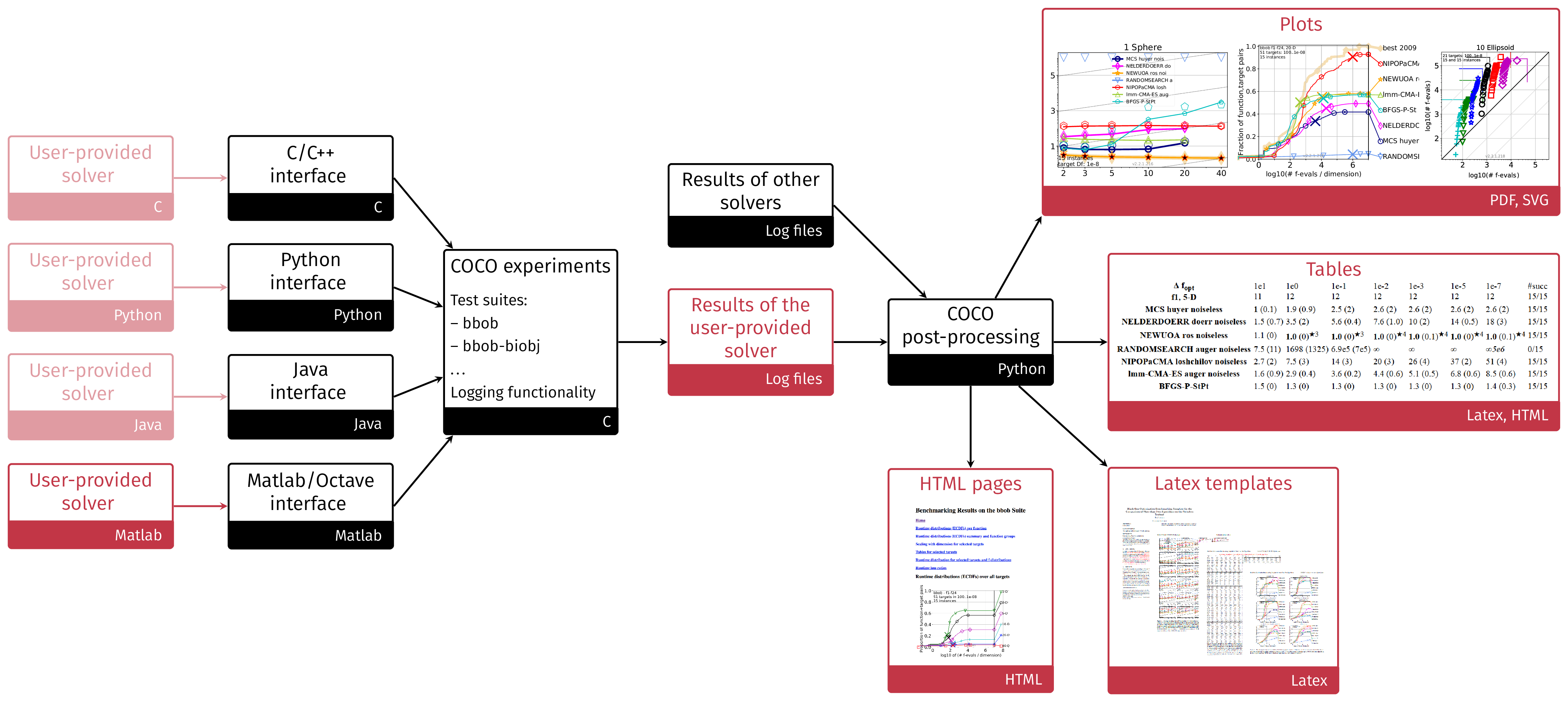

COCO is a software platform for a systematic and sound comparison of mainly continuous and mixed optimization algorithms. COCO provides implementations of

- benchmark function testbeds,

- experimentation templates which are easy to parallelize,

- tools for processing and visualization of the data generated by one or several optimizers.
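How the three components listed above fit together can be sketched in a few lines of plain Python. This is only an illustration of the workflow (testbed, experiment, post-processing) and does not use COCO's actual API: every name below (`make_testbed`, `random_search`, `run_experiment`, `evaluations_to_target`) is hypothetical, and the toy sphere function and random search stand in for a real benchmark suite and a real optimizer.

```python
import random

# Hypothetical stand-ins for the three components; none of these names
# come from COCO itself.


def sphere(x):
    """Toy benchmark function with optimal value 0 at the origin."""
    return sum(xi * xi for xi in x)


def make_testbed(dimensions=(2, 5)):
    """A 'testbed': a list of (name, dimension, function) problems."""
    return [(f"sphere_d{d}", d, sphere) for d in dimensions]


def random_search(fun, dimension, budget, rng):
    """Toy optimizer: uniform sampling in [-5, 5]^dimension.

    Returns the best-so-far function value after each evaluation.
    """
    best = float("inf")
    history = []
    for _ in range(budget):
        x = [rng.uniform(-5, 5) for _ in range(dimension)]
        best = min(best, fun(x))
        history.append(best)
    return history


def run_experiment(testbed, budget=200, seed=1):
    """'Experiment': run the optimizer on every problem and log the data."""
    rng = random.Random(seed)
    return {name: random_search(fun, dim, budget, rng)
            for name, dim, fun in testbed}


def evaluations_to_target(history, target):
    """'Post-processing': evaluations needed to reach target, or None."""
    for count, value in enumerate(history, start=1):
        if value <= target:
            return count
    return None


data = run_experiment(make_testbed())
for name, history in data.items():
    print(name, evaluations_to_target(history, target=1.0))
```

The logged data is kept separate from its evaluation on purpose: the same recorded histories can then be summarized for one optimizer or compared across several without rerunning any experiment.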

For a general introduction to the COCO software and its underlying concepts of performance assessment, see Hansen et al. (2021). For a detailed discussion of the performance assessment methodology, see Hansen et al. (2022). To get started, see Getting Started.

Citation and References

You may cite this work in a scientific context as

Hansen, N., A. Auger, R. Ros, O. Mersmann, T. Tušar, D. Brockhoff. COCO: A Platform for Comparing Continuous Optimizers in a Black-Box Setting, Optimization Methods and Software, 36(1), pp. 114-144, 2021.

@article{hansen2021coco,
  author = {Hansen, N. and Auger, A. and Ros, R. and Mersmann, O. and Tu{\v s}ar, T. and Brockhoff, D.},
  title = {{COCO}: A Platform for Comparing Continuous Optimizers in a Black-Box Setting},
  journal = {Optimization Methods and Software},
  doi = {https://doi.org/10.1080/10556788.2020.1808977},
  pages = {114--144},
  issue = {1},
  volume = {36},
  year = 2021
}

Data Flow Chart

The COCO platform has been used for the Black-Box-Optimization-Benchmarking (BBOB) workshops held at the GECCO conferences in 2009, 2010, 2012, 2013, 2015–2019, 2021–2023, and 2025. It was also used at the IEEE Congress on Evolutionary Computation (CEC’2015) in Sendai, Japan.

The COCO experiment source code was rewritten in 2014–2015. The current production code is available on our COCO GitHub pages (the links are in the menu on the left under “Development”).